AWS Lambda Cold Start Language Comparisons, 2019 edition ☃️

It’s 2019, the world’s a changing place, but the biggest question of them all is: how has Lambda’s performance changed since 2018? I’m here again to compare the cold start times of competing languages on the AWS platform. With the recent addition of Ruby, combined with the 2018 results, this should be an interesting analysis! See my Apr 2018 Language Comparison. Without spoiling the results, the improvements the AWS team has made are exciting.

Cold Start?

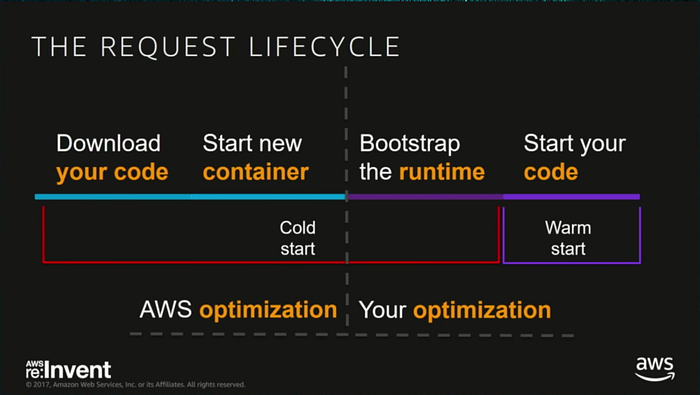

The world of Serverless computing dictates that functions are run on demand and are thrown away when not needed. Running a function only when it is needed is efficient, but it also leads to a phenomenon called cold starts.

A cold start is the first time your code has been executed in a while (5–25 minutes). This means it needs to be downloaded, containerised, booted and primed to run, and this process adds significant latency: roughly 20–60 times worse performance than a warm invocation. The length of the cold start depends on several variables: the language/runtime, the amount of resources (MB of memory) dedicated to the function, and the packages/dependencies pulled in to run the function.
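One way to see the difference between cold and warm invocations from inside a function is to rely on the fact that module-level code runs once per execution environment, during the cold start, while the handler runs on every invocation. The sketch below is a minimal, hypothetical Python handler using that common pattern; the `_COLD_START` flag and the returned fields are illustrative names, not part of any AWS API.

```python
import time

# Module-level code runs once per execution environment, i.e. during a cold start.
_COLD_START = True
_INIT_TIME = time.time()


def handler(event, context):
    """Hypothetical handler that reports whether this invocation was a cold start."""
    global _COLD_START
    was_cold = _COLD_START
    # Any later invocation served by the same (now warm) environment sees False.
    _COLD_START = False
    return {
        "message": "hello world",
        "cold_start": was_cold,
        "seconds_since_init": round(time.time() - _INIT_TIME, 3),
    }


if __name__ == "__main__":
    # Local simulation: two calls in one process mimic a cold then a warm invocation.
    print(handler({}, None)["cold_start"])  # True
    print(handler({}, None)["cold_start"])  # False
```

Once Lambda discards an idle environment, the next request gets a fresh module load, so the flag is `True` again for that cold start.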

Cold start time is important because, despite being an edge case, every time you’re hit by a spike of traffic, every Lambda function faces a potential cold start! For more information, check out my previous post, which explains cold starts further.

Methodology

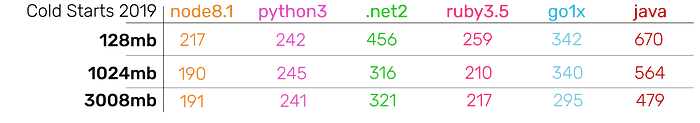

I tested the following languages: Go 1.x, Node.js 8.10, Java 8, Ruby 2.5, Python 3.6 & .NET Core 2.1. For each language I created three functions that did nothing except emit ‘hello world’, assigned 128 MB, 1024 MB & 3008 MB of memory respectively. I then created a Step Functions state machine to trigger all 18 functions, and manually collected the results from AWS X-Ray on a periodic basis.
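The 6 runtimes × 3 memory sizes give the 18 functions. As a minimal sketch of how a single state machine could trigger them all at once, the code below builds an Amazon States Language definition with one Parallel state that fans out to a Task per function. The runtime identifiers are Lambda’s names for the tested runtimes; the function naming scheme, region and account ID in the ARNs are hypothetical placeholders, and the actual state machine used for these tests isn’t shown here.

```python
import json
from itertools import product

# Lambda runtime identifiers for the versions tested.
RUNTIMES = ["go1.x", "nodejs8.10", "java8", "ruby2.5", "python3.6", "dotnetcore2.1"]
MEMORY_SIZES_MB = [128, 1024, 3008]

# Hypothetical region/account, used only to build example ARNs.
ARN_PREFIX = "arn:aws:lambda:us-east-1:123456789012:function:"


def function_name(runtime: str, memory_mb: int) -> str:
    """Hypothetical naming scheme: one hello-world function per runtime/memory pair."""
    return "hello-{}-{}mb".format(runtime.replace(".", "-"), memory_mb)


def build_state_machine() -> dict:
    """Build an ASL definition whose Parallel state invokes every function at once."""
    branches = []
    for runtime, memory_mb in product(RUNTIMES, MEMORY_SIZES_MB):
        name = function_name(runtime, memory_mb)
        # Each branch is a one-state machine that invokes a single function.
        branches.append({
            "StartAt": name,
            "States": {
                name: {"Type": "Task", "Resource": ARN_PREFIX + name, "End": True}
            },
        })
    return {
        "Comment": "Trigger all hello-world functions in parallel",
        "StartAt": "InvokeAll",
        "States": {
            "InvokeAll": {"Type": "Parallel", "Branches": branches, "End": True}
        },
    }


if __name__ == "__main__":
    definition = build_state_machine()
    print(len(definition["States"]["InvokeAll"]["Branches"]))  # 18
    print(json.dumps(definition, indent=2))
```

Running every branch in parallel means many functions start at the same moment, which is exactly the traffic-spike situation where cold starts show up.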

Results

I collected both the cold start times and a new metric that AWS X-Ray has introduced, called initialisation, which measures the environment setup time.
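The results here were collected manually, but as a hedged sketch of where the initialisation figure lives: in X-Ray, the `AWS::Lambda::Function` segment of a cold-started invocation carries an `Initialization` subsegment, and segment documents can be fetched as JSON strings (for example via boto3’s X-Ray `batch_get_traces`, under `Traces[].Segments[].Document`). The helper below only parses one such document offline; its name is hypothetical and the sample timestamps are invented purely for illustration.

```python
import json
from typing import Optional


def initialisation_ms(segment_document: str) -> Optional[float]:
    """Hypothetical helper: duration of the 'Initialization' subsegment in ms.

    Returns None when the subsegment is absent, e.g. for a warm invocation.
    """
    segment = json.loads(segment_document)
    for sub in segment.get("subsegments", []):
        if sub.get("name") == "Initialization":
            # X-Ray timestamps are epoch seconds as floats.
            return (sub["end_time"] - sub["start_time"]) * 1000.0
    return None


if __name__ == "__main__":
    # Invented document shaped like a Lambda function segment (not real data).
    sample = json.dumps({
        "name": "hello-world",
        "origin": "AWS::Lambda::Function",
        "start_time": 100.0,
        "end_time": 100.3,
        "subsegments": [
            {"name": "Initialization", "start_time": 100.0, "end_time": 100.25},
            {"name": "Invocation", "start_time": 100.25, "end_time": 100.3},
        ],
    })
    print(initialisation_ms(sample))  # 250.0
```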

Below is the Cold Starts graph, which is segmented into three sections: functions run with 128 MB, 1024 MB & 3008 MB of memory.

The same general trends from 2018 emerge: increasing the resources assigned to each function decreases start time, and compiled languages are slower.

But surprisingly, unlike in 2018, Node.js is the big winner, with Ruby and then Python lagging slightly behind. In 2018 Python dominated, which I hypothesised was because Lambda itself runs in a Python environment. That would give Python a significant advantage, as no separate runtime needed to boot up. It’s interesting to see the other runtimes optimised to the point that they can compete with Python.

Above is the average initialisation time for each language runtime. Again, it’s interesting to see Node.js compete on the same level as Python. Possibly the AWS team has built a separate function handler optimised just for Node.js functions?

The visualisation of average cold start times helps illustrate that most languages now perform strongly. Unlike in 2018, they also seem largely independent of the memory assigned to them, with the exception of Java & .NET. I wonder if this means that the engineering team at AWS is hitting the upper bounds of serverless efficiency.

If I reran these experiments in 2020, would we see any further significant improvement?

The improvement over the last 16 months is the most astounding and surprising finding. There are massive improvements across the board, with cold starts becoming reasonably inexpensive and inconsequential.

Node.js is now the most performant language, with its cold start time improving by 74.6%, or 638 ms, in just 16 months.
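The two figures together pin down the before and after times. Assuming the 74.6% is measured relative to the 2018 Node.js average, the 2018 value is 638 / 0.746 ≈ 855 ms, leaving roughly 217 ms in 2019. A tiny sketch of that arithmetic (the function name is just illustrative):

```python
def implied_before_after(improvement_ms: float, improvement_pct: float) -> tuple:
    """Back out the old and new times from an absolute and a relative improvement.

    Assumes improvement_pct is expressed relative to the old (before) value.
    """
    before = improvement_ms / (improvement_pct / 100.0)
    after = before - improvement_ms
    return before, after


if __name__ == "__main__":
    before, after = implied_before_after(638, 74.6)
    print(f"2018: ~{before:.0f} ms, 2019: ~{after:.0f} ms")  # 2018: ~855 ms, 2019: ~217 ms
```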

It is really a testament to the engineering team at AWS. Not only do they build great products, but they don’t forget about them. I’m looking forward to seeing how far these efficiencies can be taken!

Warm functions

As a last remark, I wanted to leave some statistics on warm functions, so we can appreciate how fast Lambda functions perform the majority of the time.

When properly warmed, Lambda functions are extremely performant across the board, no matter the language!