Building Agentic RAG Application with DeepSeek R1 — A Step-by-Step Guide [Part 1]

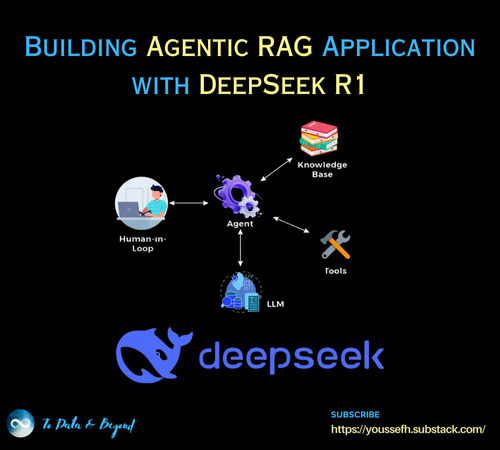

Building Agentic RAG Application with LangChain, CrewAI, Ollama & DeepSeek

While Retrieval-Augmented Generation (RAG) dominated 2023, agentic workflows are driving much of the progress in 2024 and 2025. AI agents open up new possibilities for building more powerful, robust, and versatile applications powered by Large Language Models (LLMs).

One such possibility is enhancing RAG pipelines with AI agents, producing what are known as agentic RAG pipelines. This article walks you step by step through building an agentic RAG application with the DeepSeek R1 model.

The first part of this two-part article introduces the agentic RAG workflow and the main components of our application. We then start the implementation by setting up the working environment and installing the LLM locally with Ollama. Finally, we define the tools and the agents we will use.

In Part 2, we will continue building the workflow by defining the agentic workflow tasks, then assemble the complete agentic RAG workflow, put it into action, and test it with different queries.

Table of Contents:

- Agentic RAG Pipeline