Deep Dive into the TCP Connection Establishment Process

With lots of links to the Linux kernel TCP stack

While working on the Redis Internals series, I decided to take a quick look at the TCP implementation in the latest commit of the Linux kernel's master branch. Who knew it would turn into a week-long detour, but boy, was it worth it!

Let’s get right into it.

Overview: the Three-Way Handshake and All of Its Friends

Here, I start with the basics and then narrow my focus to connection establishment specifically.

What is a TCP socket?

It's a programming construct in the Linux kernel source code: an abstraction over how Linux communicates with other hosts across a network.

Client-server communication: a client’s view

When an application wants to exchange data with a server, it first has to establish a connection. As a result, the Linux kernel creates a socket containing all the information needed to send requests reliably, and gives the application its identifier, a file descriptor. The application can then send a request through this socket. When it does so, the underlying Linux TCP stack temporarily stores the request in the socket; the data is actually sent over the network a few moments later. This process is referred to as “writing to a socket”.

Client-server communication: a server’s view

A server must create at least one listening socket to be able to accept client connections. When a client sends a TCP connection request, it arrives at the server's listening socket, and the Linux kernel creates a new socket specifically for further data exchange with this client. This newly created socket is called a connection socket. The server application needs its identifier, or descriptor, to read a specific client's requests and write responses back to it. To obtain it, the server accepts the connection request by issuing a specific command (the accept system call). Once that's done, data exchange becomes possible.

When a server receives a request, the server-side Linux TCP stack stores it in the socket until the application reads it. That's why receiving data is often called “reading from a socket”.
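You can observe both views from user space with the standard socket API. Below is a minimal sketch using Python's socket module on the loopback interface. It assumes a Linux host, and for brevity it runs the server and the client in a single process. Note that connect() returns before accept() is ever called. The kernel completes the handshake by itself, and accept() only retrieves the resulting connection socket.

```python
import socket

# Server: a listening socket on loopback; port 0 lets the kernel choose a free port.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen(16)  # the backlog limits how many connections may wait to be accepted
host, port = listener.getsockname()

# Client: connect() asks the kernel to perform the three-way handshake.
client = socket.create_connection((host, port))
client_port = client.getsockname()[1]

# No accept() has been called yet, but connect() already returned: the kernel
# finished the handshake on its own. accept() only hands over the connection socket.
conn, peer = listener.accept()

client.sendall(b"ping")  # "writing to a socket": the kernel buffers, then transmits
data = conn.recv(16)     # "reading from a socket": the bytes waited in conn
print(data)              # b'ping'

for s in (conn, client, listener):
    s.close()
```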

Connection establishment

The process of creating a TCP connection is called the three-way handshake. As the name suggests, it has three stages. First, the client sends a SYN packet. The server receives it and creates a data structure typically referred to as a connection request, or mini socket. This structure holds information that the future connection socket will need, so the server has to keep it until the connection is fully established. The server then confirms that the SYN packet was processed by sending a SYN-ACK packet. When the client receives it, it sends the last packet, an ACK. The server's Linux kernel then creates a connected socket, which waits for the server application to obtain, or accept, it.

TCP segments, sequence numbers, and acknowledgements

The TCP stack receives a stream of bytes and splits it into chunks known as TCP segments. Their size is agreed upon during connection establishment and is known as the MSS, or maximum segment size. The name is misleading: it denotes the maximum size of a segment's payload for the current connection, excluding the TCP and IP headers. What's important here is that the initiating side can suggest its own value in the SYN packet, but ultimately the receiving side decides which value to use. Generally, it doesn't choose a value greater than what the client proposed. This value is then communicated back to the initiating side in the SYN-ACK packet.
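Here is a small worked example of the arithmetic. It assumes IPv4 with no IP or TCP options, so each header is 20 bytes. The helper names are made up for illustration and are not kernel functions.

```python
IPV4_HEADER_LEN = 20  # bytes, assuming no IP options
TCP_HEADER_LEN = 20   # bytes, assuming no TCP options


def mss_for_mtu(mtu: int) -> int:
    """Largest TCP payload that fits into one IPv4 packet of the given MTU."""
    return mtu - IPV4_HEADER_LEN - TCP_HEADER_LEN


def effective_mss(own_mss: int, peer_mss: int) -> int:
    """A host sends no more than the peer announced, nor more than its own limit."""
    return min(own_mss, peer_mss)


print(mss_for_mtu(1500))          # 1460: classic Ethernet MTU
print(effective_mss(1460, 1400))  # 1400: the peer proposed less
```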

Each TCP segment carries the sequence number of its first data byte within the current connection; for short, it's simply called the sequence number. But numbering doesn't start at zero: if it did, an attacker could guess it and send counterfeit packets. That's why both sides choose their initial sequence numbers randomly during the three-way handshake. Each side generates its own sequence number, sends it to its counterpart, and waits for an acknowledgment.

When a host receives the sequence number generated by the other host, it simply increments it and sends it back. Thus, validating the acknowledgment is trivial for the original sender.
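The acknowledgment rule fits in a few lines. This sketch draws random 32-bit ISNs with secrets.randbits as a stand-in. Linux actually derives ISNs from a keyed hash of the connection's addresses and ports combined with a clock, which is not modeled here. Sequence arithmetic wraps modulo 2^32.

```python
import secrets

SEQ_MOD = 2 ** 32  # sequence numbers are 32-bit and wrap around


def handshake(client_isn: int, server_isn: int):
    """Return the (seq, ack) pairs of SYN, SYN-ACK and ACK; ack is None when unset."""
    syn = (client_isn, None)
    syn_ack = (server_isn, (client_isn + 1) % SEQ_MOD)              # echo client ISN + 1
    ack = ((client_isn + 1) % SEQ_MOD, (server_isn + 1) % SEQ_MOD)  # echo server ISN + 1
    return syn, syn_ack, ack


# Random stand-ins for the ISNs (Linux uses a keyed hash plus a clock instead).
c_isn, s_isn = secrets.randbits(32), secrets.randbits(32)
syn, syn_ack, ack = handshake(c_isn, s_isn)

# Each side validates with a single comparison against the ISN it chose itself.
client_ok = syn_ack[1] == (c_isn + 1) % SEQ_MOD
server_ok = ack[1] == (s_isn + 1) % SEQ_MOD
print(client_ok, server_ok)  # True True
```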

After the three-way handshake completes successfully, data exchange looks like this:
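During data exchange, the same idea extends to payload bytes. Each segment's sequence number is the number of its first byte, and the receiver acknowledges the next byte it expects. The sketch below assumes an arbitrary client ISN of 1000 and two small segments.

```python
SEQ_MOD = 2 ** 32


def next_seq(seq: int, payload: bytes) -> int:
    """Sequence number of the byte that follows this segment's payload."""
    return (seq + len(payload)) % SEQ_MOD


# Assume the client's ISN was 1000: the SYN consumed one number, so data starts at 1001.
seq1 = 1001
seq2 = next_seq(seq1, b"hello")   # 1006: the second segment starts right after "hello"
ack = next_seq(seq2, b" world")   # 1012: the receiver's cumulative ACK for both segments
print(seq1, seq2, ack)            # 1001 1006 1012
```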

Queues

When a SYN packet arrives, the server-side Linux kernel creates a connection request and saves it in a structure historically referred to as a queue. Elsewhere, you may stumble upon it under the names “half-open connection queue”, “incomplete connection queue”, “SYN queue”, “connection request queue”, or “request socket queue”. The last one owes its name to the structure that represents a connection request, request_sock.

After the ACK packet arrives, the connection request is removed from the connection request queue and a connection socket is created. The socket is put in another queue, which also goes by a variety of names: accept queue, backlog queue, pending connection queue, or connection backlog. When a server application “accepts” a connection (using some command that boils down to the accept system call), it simply dequeues an existing socket from the accept queue.
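Conceptually, the two queues can be modeled with a dictionary of pending requests and a FIFO of connection sockets. This is a toy sketch with hypothetical names and limits, not the kernel's data structures. As the source-code walkthrough shows, the kernel's versions are neither plain queues nor separated the way this model suggests. Syncookies are not modeled, so a SYN is simply dropped when request storage is full.

```python
from collections import deque


class ListenerModel:
    """Toy model of a listening socket's two "queues"; all names are hypothetical."""

    def __init__(self, max_requests: int, backlog: int):
        self.requests = {}           # half-open connection requests, keyed by 4-tuple
        self.accept_queue = deque()  # connection sockets not yet accepted
        self.max_requests = max_requests
        self.backlog = backlog

    def on_syn(self, four_tuple) -> bool:
        """Store a connection request; False means the SYN is dropped."""
        if len(self.requests) >= self.max_requests:
            return False
        self.requests[four_tuple] = "request"
        return True  # this is where a SYN-ACK would be sent

    def on_ack(self, four_tuple) -> bool:
        """Promote a request to a connection socket in the accept queue."""
        if four_tuple not in self.requests or len(self.accept_queue) >= self.backlog:
            return False
        del self.requests[four_tuple]
        self.accept_queue.append(four_tuple)  # stands in for the new connection socket
        return True

    def accept(self):
        """accept() does no handshake work: it only dequeues from the head."""
        return self.accept_queue.popleft()


listener = ListenerModel(max_requests=128, backlog=16)
client = ("192.0.2.10", 51000, "192.0.2.1", 80)
listener.on_syn(client)
listener.on_ack(client)
print(listener.accept())  # ('192.0.2.10', 51000, '192.0.2.1', 80)
```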

You’ll see a more detailed explanation of this process below.

SYN-flood DDoS attack

What happens if lots of clients send a SYN packet but never send the final ACK? In older Linux versions, half-open connections were added to the accept queue. This caused problems for legitimate connections: the accept queue is meant for connection sockets that are established but not yet accepted, and half-open connections took up that room.

In newer Linux versions, connection requests have separate storage. But server resources can still get exhausted. The Linux kernel has to keep all those connection requests until an ACK arrives, which, when this attack is carried out, never happens.

But there is a workaround.

Syncookies

Actually, almost all of the data a connection request contains can be obtained at the ACK-processing step. The exception is the MSS value sent by the initiating host, which it sends only in the SYN packet. Frankly, I don't think any fundamental principle would be violated if the initiating host also sent its MSS in the ACK packet. To me, it looks more like a TCP design flaw.

Instead, the syncookie mechanism works as follows. When the receiving host processes a SYN packet, it extracts the MSS value and clamps it to a user-defined value if one is set. It then encodes the result in the initial sequence number that it sends back to the initiating host in the SYN-ACK packet. The initiating host acknowledges the SYN-ACK in the usual way: it increments the receiving host's sequence number and sends it back in the ACK packet. The receiving host then extracts the MSS value from it and computes the final result.
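The sketch below shows that round trip in deliberately simplified form. It rounds the MSS down to one of four table values, the ones Linux uses in its IPv4 msstab. It also assumes the table index sits in the two low bits of the ISN. The real cookie layout, covered in the syncookies section, mixes in hashes and a time counter as well. The function names are hypothetical.

```python
MSS_TABLE = (536, 1300, 1440, 1460)  # the values in Linux's IPv4 msstab
INDEX_BITS = 2                        # four entries fit into two bits
MASK32 = 0xFFFFFFFF


def mss_index(mss: int) -> int:
    """Index of the largest table entry not above mss (entry 0 is the floor)."""
    for i in range(len(MSS_TABLE) - 1, 0, -1):
        if mss >= MSS_TABLE[i]:
            return i
    return 0


def encode_isn(opaque: int, client_mss: int) -> int:
    """Simplified layout: low bits carry the MSS index, the rest is opaque."""
    return ((opaque << INDEX_BITS) | mss_index(client_mss)) & MASK32


def decode_mss(ack_number: int) -> int:
    """The client acknowledged our ISN + 1, so undo the increment first."""
    isn = (ack_number - 1) & MASK32
    return MSS_TABLE[isn & ((1 << INDEX_BITS) - 1)]


isn = encode_isn(opaque=0x1234567, client_mss=1400)
print(decode_mss((isn + 1) & MASK32))  # 1300: 1400 is rounded down to a table entry
```

Rounding down is the price of fitting the MSS into a couple of bits: a client offering 1400 bytes ends up with 1300.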

The mechanism can be disabled entirely, enabled only when the connection request queue is full, or used always, regardless of the queue's state.
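These three modes correspond to the values 0, 1 (the default), and 2 of the net.ipv4.tcp_syncookies sysctl, discussed further in the source-code walkthrough. Below is a minimal decision function. It ignores the TIME_WAIT corner case that the kernel also checks, and the function name is hypothetical.

```python
def use_syncookie(tcp_syncookies: int, request_queue_full: bool) -> bool:
    """Decide whether to answer a SYN with a syncookie (TIME_WAIT corner case omitted)."""
    if tcp_syncookies == 2:
        return True                # always, regardless of the queue state
    if tcp_syncookies == 1:
        return request_queue_full  # the default: only when the queue is full
    return False                   # 0: disabled; a SYN hitting a full queue is dropped


for mode in (0, 1, 2):
    print(mode, use_syncookie(mode, False), use_syncookie(mode, True))
# 0 False False
# 1 False True
# 2 True True
```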

Now, let’s dive into the source code.

The Three-Way Handshake with no Syncookies

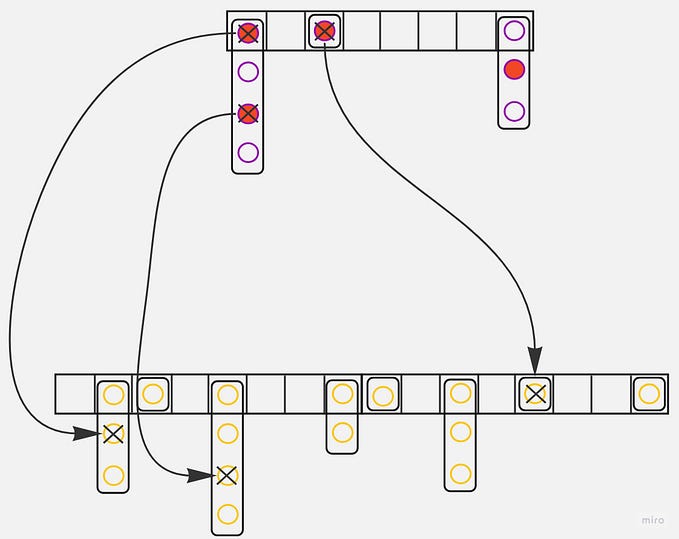

First, here is an image again of what a three-way handshake looks like when syncookies are not used:

Let’s consider it step by step.

Receiving host receives a syn packet

When a client initiates a three-way handshake, it sends a SYN packet to the server. There, the TCP layer's entry point is the tcp_v4_rcv procedure. When a packet is received, a listening socket is looked up. If one is found, its state is TCP_LISTEN. Through the tcp_v4_do_rcv() helper, we reach tcp_rcv_state_process(). This procedure is effectively a state machine applied to the socket that processes the current packet: it checks the socket's state, runs some logic based on it, and advances the state accordingly. There, I fall into the branch for the SYN-processing stage. Then, through the tcp_v4_conn_request() procedure, I end up in tcp_conn_request(). I'm interested in the case where syncookies are not used and normal operation is possible. This boils down to a single case: syncookies are either disabled or set to the default value of 1, and the request socket queue is not full (provided the SYN did not arrive for a connection in the TIME_WAIT state).
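The "check the state, act, advance" pattern is sketched below as a toy dispatcher, loosely inspired by tcp_rcv_state_process(). It models only two handshake paths from this post: a SYN hitting a TCP_LISTEN socket, and an ACK reaching a child socket in TCP_SYN_RECV. Sockets are plain dicts, and the return strings are made up.

```python
def rcv_state_process(sock: dict, flags: frozenset) -> str:
    """Toy dispatcher: branch on the socket's state, act, maybe advance the state.

    Only the handshake-related paths discussed in this post are modeled."""
    state = sock["state"]
    if state == "TCP_LISTEN":
        if "SYN" in flags and "ACK" not in flags:
            # conn_request: a request socket in TCP_NEW_SYN_RECV is created and a
            # SYN-ACK is sent; the listening socket itself stays in TCP_LISTEN.
            return "conn_request"
        return "discard"
    if state == "TCP_SYN_RECV":
        if "ACK" in flags:
            sock["state"] = "TCP_ESTABLISHED"  # the child socket becomes established
            return "established"
        return "discard"
    return "not modeled"


listener = {"state": "TCP_LISTEN"}
print(rcv_state_process(listener, frozenset({"SYN"})), listener["state"])
# conn_request TCP_LISTEN
```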

As a side note, when tcp_syncookies is 2, syncookies are always used, even when the request socket queue is not full.

A request socket is created. As I mentioned above, a request socket is not a full-blown connection socket used for data exchange. Instead, it's a connection request, or a mini socket, as it's typically called in the Linux source code. Its ireq_state is TCP_NEW_SYN_RECV, the listening socket is attached to it, and the SYN packet is saved. Finally, the request_sock is cast to a sock struct and added to a “queue”. Well, actually it's not a queue. It's a mechanism consisting of two parts:

- a hash table, usually referred to as the ehash, which is used for socket lookups;

- and, however crazy, an accept queue tracking its length through the qlen field. To make things more confusing, the accept queue is represented as a struct named request_sock_queue.

This “queue” is what's often referred to as the half-open connection queue, or the SYN queue, as I mentioned above. Its length is incremented whenever a new connection request is added.

And finally, a SYN-ACK is sent.

So, at this point, no full socket has been created. Only a mini socket exists: a request_sock struct disguised as a normal socket, which is represented by a sock structure.

Receiving host receives an ack packet

As at the SYN-processing step, a socket is looked up. In the __inet_lookup procedure, __inet_lookup_established is called first. It looks up a socket in the ehash, and there is one indeed: the temporary request_sock, cast to a sock struct, that was added during the SYN-processing stage. Its state is still TCP_NEW_SYN_RECV, so we fall into the sk->sk_state == TCP_NEW_SYN_RECV branch. Then tcp_check_req, invoked there, creates a child socket by cloning the listening socket. This newly created socket is in the TCP_SYN_RECV state. It's added to the ehash, so __inet_lookup_skb will find it when the client starts exchanging data. Right after this normal socket is added, the mini socket is removed from the ehash, since it's no longer needed there.
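Swapping the mini socket for the child socket can be sketched with a dictionary keyed by the connection's 4-tuple. This is a hypothetical model, not the kernel's ehash. It collapses "add the child, then remove the mini socket" into a single conditional replace. Checking that the mini socket is still present is one way to make sure that, if two ACKs race, only one of them installs a child.

```python
class EHash:
    """Toy lookup table keyed by the connection 4-tuple (names are hypothetical)."""

    def __init__(self):
        self.table = {}

    def lookup(self, key):
        return self.table.get(key)

    def replace(self, key, old, new) -> bool:
        """Swap old for new only if old is still hashed; models add-child-then-remove-mini."""
        if self.table.get(key) is not old:
            return False  # someone else already replaced the mini socket
        self.table[key] = new
        return True


key = ("192.0.2.10", 51000, "192.0.2.1", 80)
ehash = EHash()
mini = {"state": "TCP_NEW_SYN_RECV"}
ehash.table[key] = mini                      # inserted at the SYN stage

found = ehash.lookup(key)                    # the ACK finds the mini socket
if found is not None and found["state"] == "TCP_NEW_SYN_RECV":
    child = {"state": "TCP_SYN_RECV"}        # stands in for the cloned listener
    ehash.replace(key, found, child)

print(ehash.lookup(key)["state"])            # TCP_SYN_RECV
```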

Then the socket is added to the tail of the accept queue, and the queue's length is incremented. From an implementation point of view, though, what gets added is not actually a socket. Instead, it's the same request_sock created at the SYN stage, which now stores the child socket created earlier. It's a funny asymmetry. The request queue conceptually holds connection requests, although technically it contains sockets (not to mention that it's not a queue at all). The accept queue conceptually holds sockets, although technically it consists of connection requests. The accept queue's length is tracked through the listening socket's sk_ack_backlog field.

Finally, tcp_child_process is called. It calls the already familiar tcp_rcv_state_process, but this time for the newly created socket. Its state is TCP_SYN_RECV, and it's set to TCP_ESTABLISHED. It's worth noting that each socket arriving in the accept queue is in the TCP_SYN_RECV state; it becomes established a few moments later.

The Three-Way Handshake with Syncookies

I won't go into the same level of detail as in the previous section, since much of the implementation remains the same. I'll only highlight the differences between the scenarios with and without syncookies.

First, here is the flow:

Receiving host receives a syn packet

As before, tcp_rcv_state_process is invoked through the tcp_v4_rcv entry point and the tcp_v4_do_rcv helper. There, in the tcp_conn_request procedure, we read the sysctl_tcp_syncookies setting, which is 1 by default. The syncookie mechanism is used if the setting is 2 or if the connection request queue is full. This applies only if the SYN did not arrive for a connection in the TIME_WAIT state, that is, if the tcp_tw_isn field is empty.

Then a syncookie is generated. For TCP over IPv4, the cookie_v4_init_sequence procedure does that. A syncookie is a sum of the following values:

- two hashes of the source and destination addresses and ports;

- Linux's internal representation of the current time;

- the sequence number sent by the initiating host, and the clamped MSS value, encoded as an index into a small table of common MSS values.

All of this is then sent to the client in the seq field of the SYN-ACK packet.

Although a request socket is created as part of the implementation, it's not put in the connection request queue. Instead, it's disposed of.

Receiving host receives an ack packet

As always, everything starts with the tcp_v4_rcv procedure. Since there is no connection request in the ehash, only the listening socket can be found. It's in the TCP_LISTEN state, so we end up in tcp_v4_do_rcv. There, the syncookie logic kicks in. First, the syncookie value is validated:

- The syncookie must be less than 120 seconds old;

- Then the MSS value (more precisely, its index in the MSS table) is extracted and returned. If the index is in the range 0 to 3, the syncookie is considered valid.
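The sketch below illustrates both the generation and the validation steps. It loosely follows the layout used by secure_tcp_syn_cookie() and check_tcp_syn_cookie() in net/ipv4/syncookies.c: a hash plus the client's ISN, a time counter in the top 8 bits, and a masked second hash plus the MSS index in the low 24 bits. Several parts are assumptions. Keyed BLAKE2b from Python's hashlib stands in for the kernel's keyed hash. SECRET and the addresses are made up. The integer `count` stands in for the kernel's coarse clock, which is assumed to tick roughly once a minute, so an age limit of 2 ticks matches "120 seconds".

```python
import hashlib
import struct

COOKIEBITS = 24
COOKIEMASK = (1 << COOKIEBITS) - 1
MASK32 = 0xFFFFFFFF
MAX_AGE = 2                           # counter ticks; with ~60 s ticks, about 120 s
MSS_TABLE = (536, 1300, 1440, 1460)   # Linux's IPv4 msstab values
SECRET = b"hypothetical-boot-secret"  # stand-in for the kernel's random secret


def cookie_hash(conn, count: int, c: int) -> int:
    """Keyed 32-bit hash of the 4-tuple; BLAKE2b substitutes for the kernel's hash."""
    saddr, daddr, sport, dport = conn
    data = struct.pack("!IIHHIB", saddr, daddr, sport, dport, count & MASK32, c)
    return int.from_bytes(hashlib.blake2b(data, key=SECRET, digest_size=4).digest(), "big")


def mss_index(mss: int) -> int:
    """Index of the largest table entry not above mss (entry 0 is the floor)."""
    for i in range(len(MSS_TABLE) - 1, 0, -1):
        if mss >= MSS_TABLE[i]:
            return i
    return 0


def make_cookie(conn, client_isn: int, count: int, mss: int) -> int:
    """Server ISN = hash + client ISN + (time << 24) + ((hash' + MSS index) & mask)."""
    return (cookie_hash(conn, 0, 0) + client_isn + (count << COOKIEBITS)
            + ((cookie_hash(conn, count, 1) + mss_index(mss)) & COOKIEMASK)) & MASK32


def check_cookie(conn, ack_seq: int, seq: int, count: int):
    """Return the MSS encoded in a returning ACK, or None if stale or invalid."""
    cookie = (ack_seq - 1) & MASK32    # the client acknowledged our ISN + 1
    client_isn = (seq - 1) & MASK32    # the SYN consumed one sequence number
    cookie = (cookie - cookie_hash(conn, 0, 0) - client_isn) & MASK32
    diff = (count - (cookie >> COOKIEBITS)) & (MASK32 >> COOKIEBITS)
    if diff >= MAX_AGE:
        return None                    # older than the allowed window
    mssind = (cookie - cookie_hash(conn, count - diff, 1)) & COOKIEMASK
    return MSS_TABLE[mssind] if mssind < len(MSS_TABLE) else None


conn = (0xC000020A, 0xC0000201, 51000, 80)  # 192.0.2.10:51000 -> 192.0.2.1:80
cookie = make_cookie(conn, client_isn=1000, count=500, mss=1460)
print(check_cookie(conn, (cookie + 1) & MASK32, 1001, count=501))  # 1460
```

A mangled or forged ACK will almost always fail either the age check or the index-range check. With only 24 bits of hash in play, though, this protection is probabilistic rather than absolute.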

If everything is OK, a socket is created. More precisely, this is done by the tcp_v4_syn_recv_sock procedure, the same one used when syncookies are disabled. The socket ends up in the accept queue the same way, too. At this point, it's still in the TCP_SYN_RECV state.

The three-way handshake then completes as in the previous section, with the tcp_child_process procedure invoked for the child socket.

In closing

So that's about it. This topic is extensive indeed; I have highlighted only the details that were of particular interest to me. Hopefully, there will be more posts about the intricacies of the TCP implementation.

UPD: the original version of this post contained a mistake in the syncookies explanation. Thanks to the noble and benevolent Hacker News souls who pointed it out, in a kind and gentle manner that we all lack in the darker places of the Internet.

Level Up Coding
