|TRANSFORMER|KOLMOGOROV-ARNOLD NETWORK|MLP|AI|

Kolmogorov-Arnold Transformer (KAT): Is the MLP Headed for Retirement?

Exploring how the Kolmogorov-Arnold Transformer (KAT) challenges the dominance of the MLP in modern deep learning

Wisdom and penetration are the fruit of experience, not the lessons of retirement and leisure. Great necessities call out great virtues. — Abigail Adams

Transformers are the most successful architecture in deep learning: they dominate text generation (and most other NLP tasks) and are now widely used in other fields such as computer vision. Despite this dominance, the transformer is not without flaws, and the search for new architectures continues. One of its biggest flaws is the cost of the attention mechanism, which grows quadratically with sequence length. Today, efforts are being made to find a lighter alternative.
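To make the quadratic cost concrete, here is a minimal NumPy sketch. The helper `attention_weights`, the random queries and keys, and the choice of `d_model=8` are illustrative assumptions, not code from any particular transformer implementation. It only shows that the attention matrix has one entry per pair of tokens, so doubling the sequence length quadruples its size.

```python
import numpy as np

def attention_weights(n_tokens, d_model=8, seed=0):
    """Softmax attention matrix for random queries and keys (illustrative only)."""
    rng = np.random.default_rng(seed)
    q = rng.standard_normal((n_tokens, d_model))
    k = rng.standard_normal((n_tokens, d_model))
    scores = q @ k.T / np.sqrt(d_model)            # shape: (n_tokens, n_tokens)
    scores -= scores.max(axis=-1, keepdims=True)   # subtract row max for numerical stability
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)

# One entry per (query token, key token) pair: the count grows as n_tokens ** 2.
for n in (128, 256, 512):
    a = attention_weights(n)
    print(n, a.shape, a.size)
```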

The transformer consists not only of the attention mechanism but also of multi-layer perceptrons (MLPs). MLPs owe much of their popularity to the fact that, in theory, they can approximate any continuous function on a bounded domain to arbitrary accuracy, provided they have enough neurons. In practice, however, MLPs have flaws of their own:

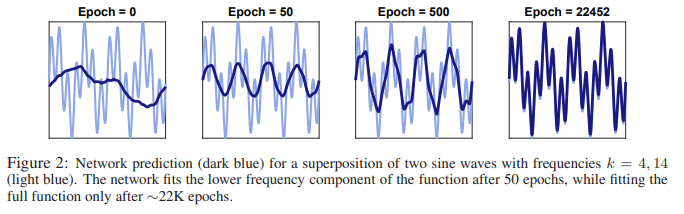

- An MLP struggles to approximate a periodic function.

- MLPs converge slowly during training, especially on high-frequency functions.
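One intuition for the first point can be sketched in a few lines of NumPy, under some loud assumptions: the network below has a single hidden layer with ReLU activations, scalar input, and random (untrained) weights, and the names `relu_mlp` and `second_difference` are hypothetical helpers. Such a network is piecewise linear, so beyond its last "kink" it is a straight line and cannot keep oscillating the way a sine wave does. This is an illustration of one possible cause, not a reproduction of the experiment behind the figure.

```python
import numpy as np

rng = np.random.default_rng(0)
hidden = 16
w1 = rng.standard_normal(hidden)  # input-to-hidden weights (scalar input)
b1 = rng.standard_normal(hidden)  # hidden biases
w2 = rng.standard_normal(hidden)  # hidden-to-output weights
b2 = rng.standard_normal()        # output bias

def relu_mlp(x):
    """One-hidden-layer ReLU MLP with scalar input and scalar output."""
    x = np.asarray(x, dtype=float)
    h = np.maximum(0.0, np.outer(x, w1) + b1)  # shape: (len(x), hidden)
    return h @ w2 + b2

def second_difference(f, x, h=1.0):
    """Discrete curvature f(x + 2h) - 2 f(x + h) + f(x); zero for a straight line."""
    x = np.asarray(x, dtype=float)
    return f(x + 2 * h) - 2 * f(x + h) + f(x)

# Each hidden unit switches on or off at x = -b1 / w1; past the last such
# point the set of active units no longer changes, so the MLP is affine.
last_kink = np.max(np.abs(b1 / w1))
xs = last_kink + 1.0 + np.arange(10)

mlp_curvature = np.max(np.abs(second_difference(relu_mlp, xs)))
sin_curvature = np.max(np.abs(second_difference(np.sin, xs)))
print("MLP:", mlp_curvature, "sin:", sin_curvature)
```

The MLP's curvature in this region is zero up to floating-point error, while the sine keeps bending. Adding hidden units adds kinks but does not change this behavior outside the range they cover.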

Despite these limitations, there have been few attempts to supplant MLPs in general (and in the transformer).