Somewhere Over the Gradient

When a model’s gradients are unavailable or costly.

At the top of the list of “most important algorithms in history” one would undoubtedly find gradient descent. Constituting the underpinning of backpropagation, this algorithm is at the heart of today’s boom in AI.

The term “gradient” has several meanings in mathematics, the simplest being a “slope”. In deep learning, the gradient is a vector that represents the direction of the loss function’s steepest ascent. Subtracting the gradient vector from the weights vector results in steepest descent, i.e., minimization of the loss function — which is the ultimate goal.

However, there are scenarios where gradients are not readily available or are computationally expensive to obtain.

So what can you do if you don’t have access to gradients?

Fear not! You have recourse to several options.

Random Search and Grid Search

Random search is exactly what it sounds like — just search… randomly: “Random search (RS) is a family of numerical optimization methods that do not require the gradient of the problem to be optimized...” (Wikipedia)

One primary use of random search is in hyperparameter tuning (or optimization), which is the problem of choosing a set of optimal hyperparameters for a learning algorithm.

Here’s a basic example of random search used to tune hyperparameters for a Support Vector Classifier:

from sklearn import datasets

from sklearn.model_selection import train_test_split

from sklearn.svm import SVC

from sklearn.model_selection import RandomizedSearchCV

from scipy.stats import uniform

# Load the Iris dataset

X, y = datasets.load_iris(return_X_y=True)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# Define the model

model = SVC()

# Define the hyperparameter distribution

hyperparams_random = {

'C': uniform(0.1, 100), # Continuous distribution for C

'kernel': ['linear', 'rbf', 'poly'], # Discrete choices for kernel

'gamma': ['scale', 'auto'] # Discrete choices for gamma

}

# Perform random search

random_search = RandomizedSearchCV(model, hyperparams_random, n_iter=100)

random_search.fit(X_train, y_train)

# Print the best hyperparameters

print("Random Search - Best Parameters:", random_search.best_params_)

# Evaluate the model

random_search_accuracy = random_search.score(X_test, y_test)

print("Random Search - Test Accuracy:", random_search_accuracy)Note that we’ve given the random-search process a “budget” of points to sample in the hyperparameter space: n_iter=100.

A close “cousin” of random search is grid search, which searches exhaustively through a pre-specified subset of the hyperparameter space. Here’s the added code for the same example we’ve just shown:

from sklearn.model_selection import GridSearchCV

model = SVC()

# Define the hyperparameter grid

hyperparams_grid= {

'C': [0.1, 1, 10, 100], # Discrete choices for C

'kernel': ['linear', 'rbf', 'poly'], # Discrete choices for kernel

'gamma': [0.01, 0.1, 1, 'scale', 'auto'] # Discrete choices for gamma

}

# Perform GridSearchCV

grid_search = GridSearchCV(model, hyperparams_grid)

grid_search.fit(X_train, y_train)

# Print the best hyperparameters

print("Grid Search - Best Parameters:", grid_search.best_params_)

# Evaluate the model

grid_search_accuracy = grid_search.score(X_test, y_test)

print("Grid Search - Test Accuracy:", grid_search_accuracy)Evolutionary Algorithms

Evolutionary Algorithms apply core concepts from evolutionary biology — inheritance, random variation, and selection — to solve complex computational problems. An initial population of candidate solutions (represented by “genomes”) is generated (usually at random), after which the algorithm cycles through a fitness-selection-variation loop, until an acceptable solution is found. Each iteration is know as a generation.

Evolutionary Algorithms present several important benefits over popular machine learning methods, first and foremost being the ability to handle no or approximate gradients. Other benefits include: ability to handle design problems, where the objective is to design new entities from scratch; fewer required a priori assumptions about the problem at hand; seamless integration of human expert knowledge; ability to solve problems where human expertise is very limited; support of interpretable solution representations; and support of more than one objective, so called evolutionary multiobjective optimization.

I’ve personally used evolutionary algorithms quite beneficially in conjunction with deep networks, specifically in the area of adversarial attacks: the manipulation of input data — often imperceptibly to humans — to provoke a model into producing incorrect outputs or other unintended consequences. The focal point of this research was so-called black-box scenarios.

The black box is the deep network under attack, meaning the attacker has no knowledge of its internals (weights, gradients, etc.). This is quite a realistic scenario because attackers in real life (either accidental or intentional) more often than not have no access to the model itself — only to its outputs.

With judicious use of evolutionary algorithms you can circumvent the no-access-to-gradients situation.

Another example is given here:

In the context of Large Language Models (LLMs), “jailbreaking” refers to the careful engineering of prompts to exploit model biases and generate outputs that may not align with their intended purpose. Even if you have access to the LLM’s gradients, the computational cost of jailbreaking would be prohibitive. You can circumvent this cost through clever use of an evolutionary algorithm.

Bayesian Optimization

Bayesian optimization is a technique used for optimization of black-box functions. It iteratively builds a probabilistic model that guides the search process intelligently.

Bayesian optimization is often used in hyperparameter optimization, since it is usually more effective and efficient than either random search or grid search. Given a budget of calls to the (black-box) function, it will strive to make each call count.

Hyperparameter Tuning

This isn’t really a “thing” unto itself, since it employs previous methods, but it’s worth mentioning separately given the importance of hyperparameters in machine learning.

There are several great hyperparameter-tuning packages out there, most notably: Optuna, Hyperopt, and scikit-optimize.

I’m familiar with the Optuna package, having put it to good use many times. At its core are two optimization algorithms we discussed above: Bayesian and evolutionary.

A neat “trick” can be had when you notice that it’s really up to you to define what exactly constitutes a hyperparameter. For example, a hyperparameter need not confine itself to one type of model — the model itself can be a hyperparameter! Indeed, this is demonstrated in one of Optuna’s code examples, where the choice of model — SVC or Random Forest — is defined as a hyperparameter and “handed over” to Optuna, which will figure out the one that works best:

import sklearn

import optuna

# 1. Define an objective function to be maximized.

def objective(trial):

# 2. Suggest values for the hyperparameters using a trial object.

classifier_name = trial.suggest_categorical('classifier', ['SVC', 'RandomForest'])

if classifier_name == 'SVC':

svc_c = trial.suggest_float('svc_c', 1e-10, 1e10, log=True)

classifier_obj = sklearn.svm.SVC(C=svc_c, gamma='auto')

else:

rf_max_depth = trial.suggest_int('rf_max_depth', 2, 32, log=True)

classifier_obj = sklearn.ensemble.RandomForestClassifier(max_depth=rf_max_depth, n_estimators=10)

...

return accuracy

# 3. Create a study object and optimize the objective function.

study = optuna.create_study(direction='maximize')

study.optimize(objective, n_trials=100)Finetuning

Finetuning takes a pretrained model and further trains it on a new task or dataset. This can be done, for example, by keeping most of the model layers “frozen” and retraining only the final layers. We’re therefore only accessing a small part of the gradients, which keeps costs under control.

When it comes to LLMs, there seems to have arisen of late an entire cottage industry of finetuning.

Further, with LLMs we can modify the way we interact with the model — the prompt — so as to improve results without any tuning whatsoever. Few-shot prompting provides the LLM examples with detailed answers to questions, showing the model how to work through a problem. Chain-of-thought prompting tells the model to reason by adding phrases like “Let’s think step by step”.

Speaking of LLMs, let me also mention Parameter-Efficient Fine-Tuning (PEFT) and Low-Rank Adaptation (LoRa). PEFT methods fine-tune a small number of model parameters, significantly decreasing computational and storage costs, with performance comparable to a fully fine-tuned model. LoRA represents the weight updates with two smaller matrices through low-rank decomposition.

Surrogate Model

A surrogate model aims to generate a new model that approximates the original model, whose gradients are inaccessible or expensive. Usually we aim for the surrogate model to be simpler than the original.

The main point to note is that when training the surrogate model we only need the original model to supply input-output pairs: no internals, no gradients. (Note: The surrogate model will need training — and gradients.)

Reinforcement Learning

Lastly, let me mention reinforcement learning, where gradients may not be available due to non-differentiable or noisy environments. There is by now a hefty bag of tricks to deal with such situations.

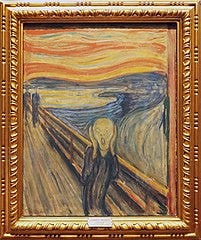

In Lewis Carroll‘s Alice in Wonderland Alice asks the Cheshire Cat for directions.

“Would you tell me, please, which way I ought to go from here?”

“That depends a good deal on where you want to get to,” said the Cat.

“I don’t much care where — ” said Alice.

“Then it doesn’t matter which way you go,” said the Cat.

“ — so long as I get somewhere,” Alice added as an explanation.

“Oh, you’re sure to do that,” said the Cat, “if you only walk long enough.”

As we’ve seen, even without directions, er, I mean gradients — we can get somewhere…